Key Takeaways

- The combination of Volt and Redpanda empowers companies to take action on real-time data by efficiently handling both streaming and processing.

- Volt is an ultra-fast, scalable, ACID-compliant decisioning platform for processing, storing, and streaming data, while Redpanda implements the Apache Kafka protocol and is designed for demanding, high-volume/low-latency workloads.

- A benchmark demonstrated a sustained workload of 103,092 TPS with an average latency of 15 milliseconds (ms) for US$12.75/hr using US-based Google Cloud servers.

- The benchmark set-up was based on the real-world requirements of writing code to turn messages about mobile phone data usage into line items on customer bills for a tier-1 US phone company.

- Volt and Redpanda combine to deliver low end-to-end latency for modern applications that need scale, speed, and consistency, offering a cost-effective solution compared to alternatives like kSQL or Flink.

INTRODUCTION

The desire and need to use data in “real time” is driving an evolution from slow, batch-oriented processing to streaming, event-driven architectures.

Fully capitalizing on real-time data means identifying and acting on the moment of “significance”—i.e., the moment of customer engagement or threat prevention—before the chance to monetize it or prevent it from doing damage passes.

To accomplish this, your data platform needs to be able to perform the entire ingest-decide-act sequence with low latency. If not, the chance to act on the data is lost, and so is the revenue (or maybe even the customer).

The combination of Volt and Redpanda lets companies take action on real-time data by efficiently handling both streaming and processing.

In this first part of our three-part series on how Volt and Redpanda combine to empower companies for real-time data processing, we dig into the details of our first benchmark with Redpanda.

WHY VOLT + REDPANDA?

Volt is an ultra-fast, scalable, ACID-compliant decisioning platform for processing, storing, and streaming data.

Redpanda implements the Apache Kafka protocol using C++, and, like Volt, is designed for demanding, high-volume/low-latency workloads.

Volt and Redpanda recently conducted a benchmark to demonstrate how you can pair them to solve complex mediation and aggregation problems at scale with a low total cost of ownership (TCO). This makes a significant impact for any company looking to take full advantage of streaming data protocols like Kafka.

Just about any system can produce impressive transactions per second (TPS) by simplifying a problem and throwing near-infinite cloud resources at it. TPS is, of course, very important. But in the real world, if you have to spend outrageous amounts of money to achieve that kind of throughput, it’s probably not worth it: TCO is what ultimately determines success. Our goal is therefore to demonstrate a series of user-repeatable performance benchmarks, at real-world levels of complexity, that companies can use to see the value of Redpanda + Volt at a variety of scales and weigh it against the total cost of such a solution.

This initial benchmark demonstrated a sustained workload of 103,092 TPS with an average latency of 15 milliseconds (ms) for US$12.75/hr using US-based Google Cloud servers — an excellent result considering no meaningful engineering or customization took place. Can we go higher? Absolutely. We aim to achieve 1,000,000 TPS at (hopefully) the same per-event price point, so stay tuned for more announcements.
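To make the "per-event price point" concrete, the two headline figures can be turned into a cost per million events. The sketch below uses only the throughput and hourly cost quoted above; the function name and the projection to 1,000,000 TPS are illustrative assumptions, not part of the benchmark code.

```python
# Figures quoted in this post.
SUSTAINED_TPS = 103_092
COST_PER_HOUR_USD = 12.75

SECONDS_PER_HOUR = 3_600


def cost_per_million_events(tps: float, cost_per_hour: float) -> float:
    """Spread the hourly infrastructure cost over one million events."""
    events_per_hour = tps * SECONDS_PER_HOUR
    return cost_per_hour / events_per_hour * 1_000_000


events_per_hour = SUSTAINED_TPS * SECONDS_PER_HOUR
per_million = cost_per_million_events(SUSTAINED_TPS, COST_PER_HOUR_USD)

# Hypothetical projection: hourly bill at 1,000,000 TPS if the per-event
# price stayed exactly the same (an assumption, not a measured result).
target_tps = 1_000_000
projected_hourly_cost = per_million * target_tps * SECONDS_PER_HOUR / 1_000_000

print(f"events per hour: {events_per_hour:,}")
print(f"cost per million events: ${per_million:.4f}")
print(f"hourly cost at 1M TPS, same per-event price: ${projected_hourly_cost:.2f}")
```

This covers infrastructure cost only, as quoted; licensing or operational costs are outside what the figures above describe.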

BENCHMARK SET-UP

Real-world processing isn’t as simple as the PowerPoint slides that get shown at conferences, and not many problems can be solved with a single SQL statement. Volt already has code that performs non-trivial data-driven decision-making like this, and we’re going to combine it with Redpanda to handle complex decisions while maintaining end-to-end low latency and the ability to store huge quantities of data.

Volt-Aggdemo is a demonstration of rules-based record aggregation. Instead of simple time-based aggregation, we maintain a running count of units used by a session, and aggregation happens only when we satisfy one of a series of possible conditions.

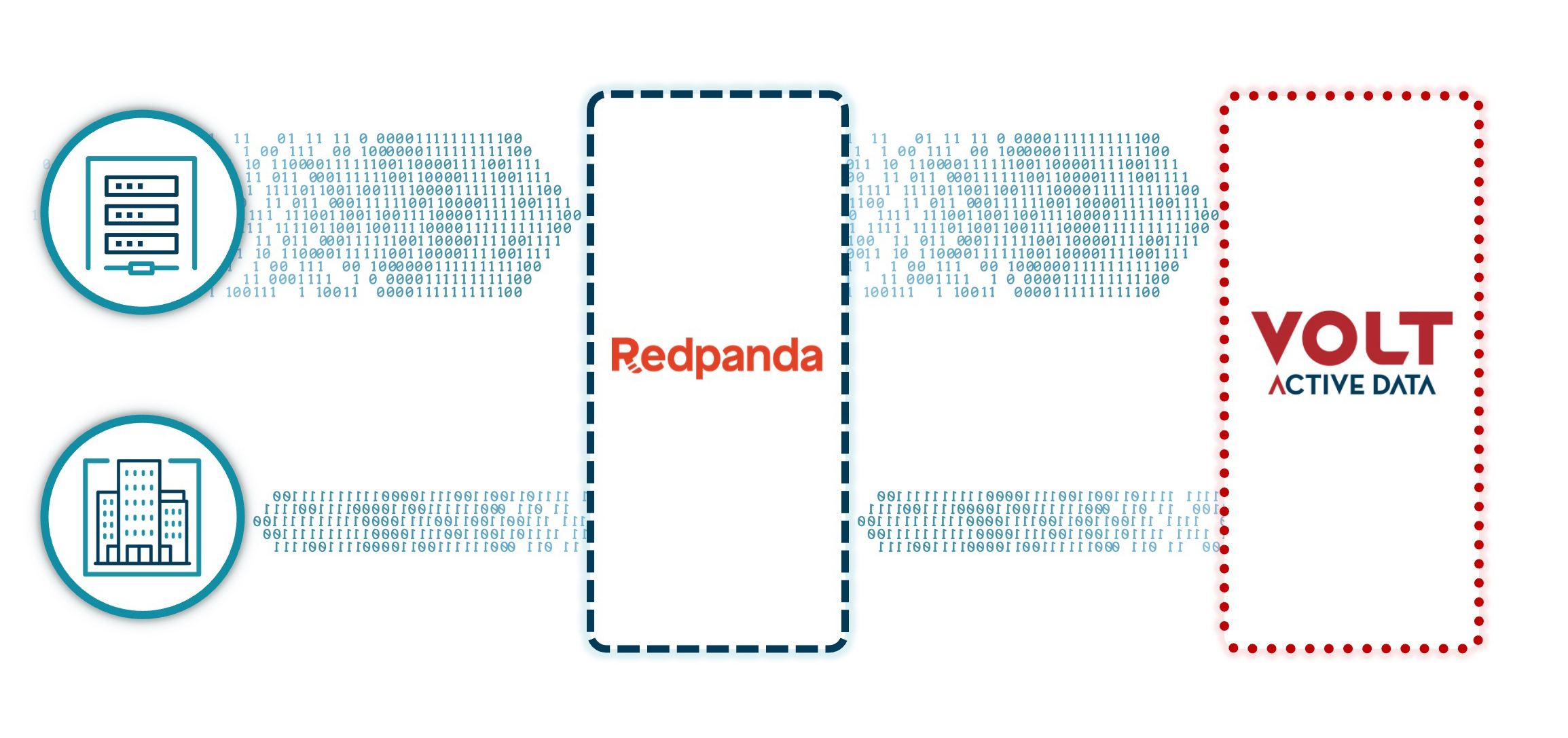

NETWORK SWITCHES CREATED A HUGE TIDE OF RECORDS (TOP LEFT). REDPANDA TRANSPORTS THESE RECORDS. VOLT INGESTS THEM, AGGREGATES THEM ACCORDING TO COMPLEX RULES, AND SENDS THE RESULTS BACK TO REDPANDA FOR DOWNSTREAM USE.

The benchmark set-up was based on the real-world requirements of writing code to turn messages about mobile phone data usage into line items on customer bills for a tier-1 US phone company. The benchmark code itself is open source and can be run by anyone with access to Volt and Redpanda.

The goals of this and the coming benchmarks were to:

- Support 100,000 records per second.

- Process all records received that are up to seven days old. This means sessions with one missing record are processed when the missing record shows up or the session ages out. Under no circumstances do we process a duplicate record.

- Never process a session that is missing one or more records. Such sessions are reported.
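The completeness and duplicate goals above can be sketched as a small per-session tracker. This is a minimal illustration, not the benchmark's implementation: it assumes each record carries a sequence number starting at 0 and a timestamp, and the class and method names are hypothetical.

```python
from dataclasses import dataclass, field

SEVEN_DAYS_SECONDS = 7 * 24 * 60 * 60  # maximum record age from the goals above


@dataclass
class SessionTracker:
    """Tracks which sequence numbers have arrived for one session (hypothetical model)."""

    seen: set = field(default_factory=set)

    def accept(self, seqno: int, record_ts: float, now: float) -> str:
        """Classify a record as 'too_old', 'duplicate', or 'accepted'."""
        if now - record_ts > SEVEN_DAYS_SECONDS:
            return "too_old"
        if seqno in self.seen:
            return "duplicate"  # never processed a second time
        self.seen.add(seqno)
        return "accepted"

    def missing(self) -> list:
        """Sequence numbers absent below the highest one seen so far."""
        if not self.seen:
            return []
        return [n for n in range(max(self.seen) + 1) if n not in self.seen]

    def is_complete(self) -> bool:
        """True when at least one record arrived and there are no gaps."""
        return bool(self.seen) and not self.missing()


now = 1_000_000.0
tracker = SessionTracker()
results = [tracker.accept(s, now, now) for s in (0, 1, 3, 1)]
gap_before = tracker.missing()           # record 2 has not arrived yet
late = tracker.accept(2, now, now)       # the missing record shows up
stale = tracker.accept(4, now - SEVEN_DAYS_SECONDS - 1, now)
print(results, gap_before, late, stale, tracker.is_complete())
```

A session with a gap would be held back until the gap is filled or the session ages out, at which point it is reported rather than processed.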

The benchmark also followed these aggregation rules:

- Event-based aggregation: We cut output records when the session ends or when we’ve seen more than 1,000,000 bytes of usage.

- Time-based aggregation: We cut output records when we haven’t seen traffic for a while.

- Quantity-based aggregation: We cut output records when we’ve seen a lot of input records.

- Duplicates and other malformed records we receive are output to a separate queue.
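The rules above can be sketched as a running total per session plus a set of cut conditions. This is an illustrative sketch only: the 1,000,000-byte limit comes from the rules above, while the idle timeout, the record-count limit, and all names are hypothetical placeholders, because the post does not quantify "a while" or "a lot".

```python
from dataclasses import dataclass
from typing import Optional

MAX_BYTES = 1_000_000        # from the event-based rule above
IDLE_TIMEOUT_SECONDS = 300   # hypothetical: "a while" is not quantified in the post
MAX_INPUT_RECORDS = 100      # hypothetical: "a lot" is not quantified in the post


@dataclass
class SessionState:
    bytes_used: int = 0
    record_count: int = 0
    last_seen: float = 0.0


def add_record(state: SessionState, usage_bytes: int, ts: float,
               session_end: bool = False) -> Optional[str]:
    """Fold one record into the running totals; return the reason to cut, if any."""
    state.bytes_used += usage_bytes
    state.record_count += 1
    state.last_seen = ts
    if session_end:
        return "session_end"           # event-based
    if state.bytes_used > MAX_BYTES:
        return "byte_limit"            # event-based
    if state.record_count >= MAX_INPUT_RECORDS:
        return "record_limit"          # quantity-based
    return None


def check_idle(state: SessionState, now: float) -> Optional[str]:
    """Time-based rule, evaluated periodically rather than on record arrival."""
    if state.record_count > 0 and now - state.last_seen > IDLE_TIMEOUT_SECONDS:
        return "idle_timeout"
    return None


def cut(state: SessionState) -> dict:
    """Emit the aggregated output record and reset the running totals."""
    output = {"bytes": state.bytes_used, "records": state.record_count}
    state.bytes_used = 0
    state.record_count = 0
    return output


state = SessionState()
r1 = add_record(state, 600_000, ts=0.0)
r2 = add_record(state, 500_000, ts=10.0)   # running total now exceeds MAX_BYTES
output = cut(state)
r3 = add_record(state, 10, ts=20.0)
r4 = check_idle(state, now=20.0 + IDLE_TIMEOUT_SECONDS + 1)
print(r1, r2, output, r3, r4)
```

Keeping the time-based check separate reflects that an idle session produces no incoming record to trigger it, so something has to poll for it.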

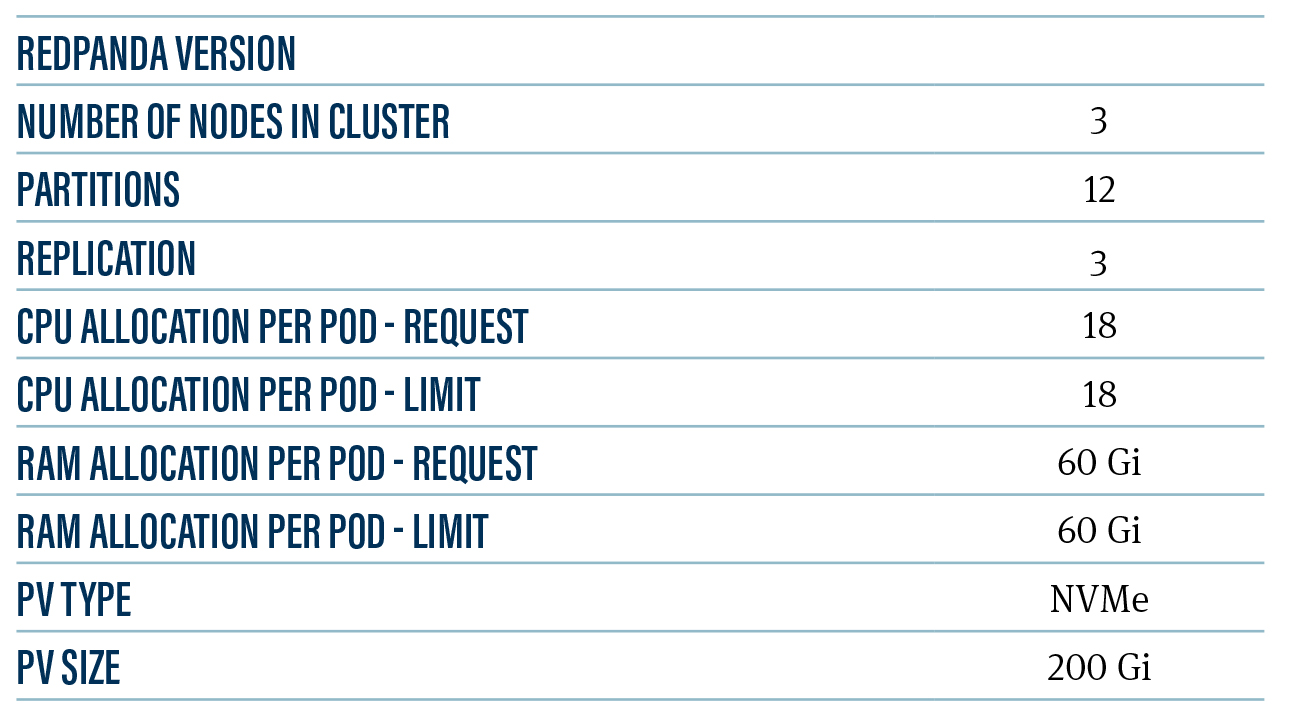

HARDWARE AND SOFTWARE

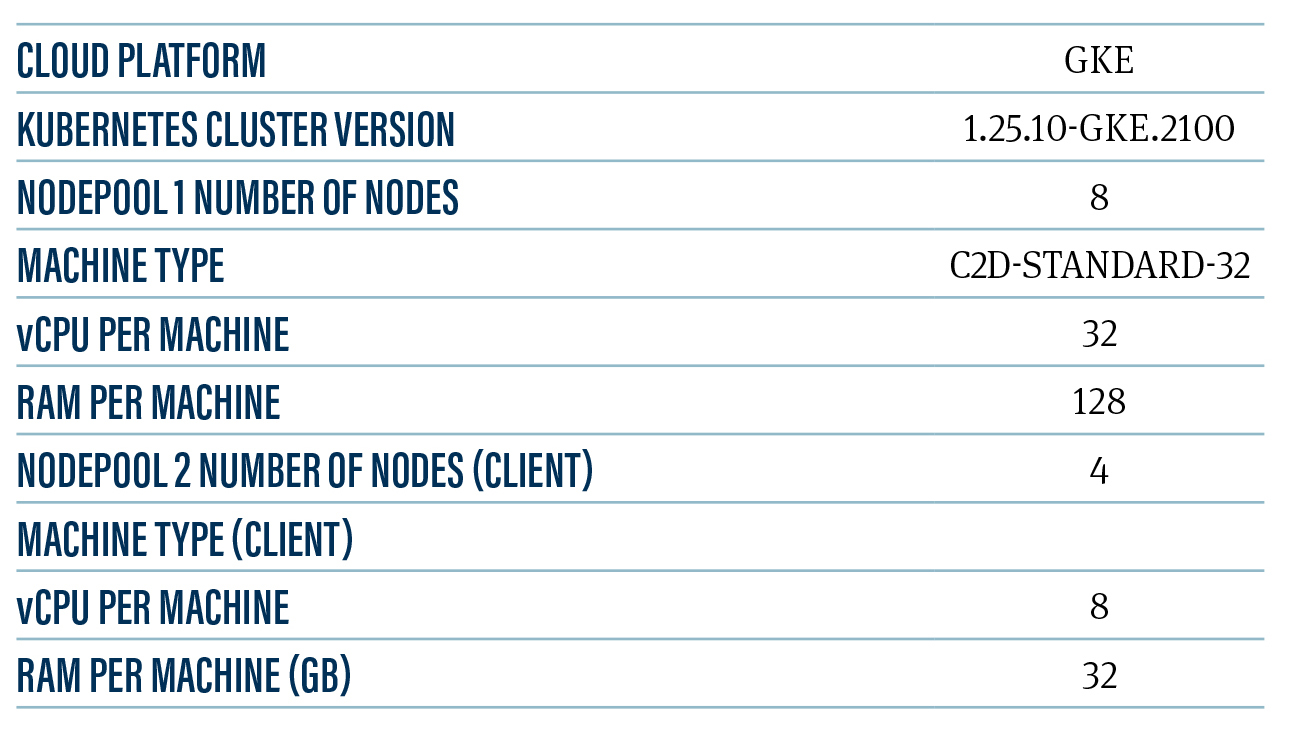

The benchmark ran on a GKE cluster with two sets of node pools:

- A set with bigger servers to host Volt and Redpanda along with their monitoring setup.

- A set with smaller machines to host data generator client pods.

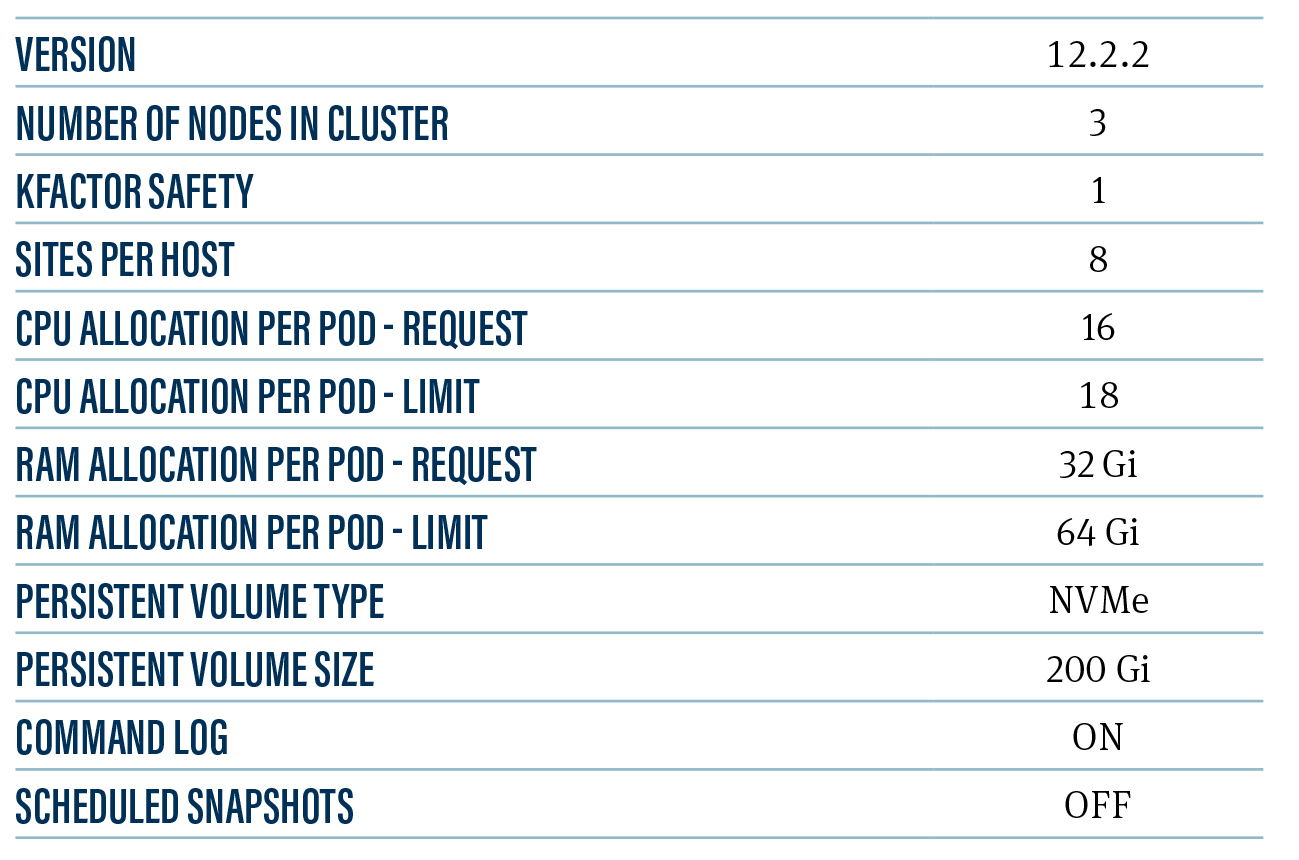

We deployed Volt on 3 nodes, each with 8 physical/16 logical cores. We used a KFactor of 1, meaning 1 spare copy of everything, which gave us 12 pairs of 2 physical cores each (24 cores / 2). To reach our target of 100K TPS, each pair needed to handle about 8,333 messages per second (100,000 / 12).

Redpanda also had 3 nodes, and the topics were set up with a replication factor of 3 (i.e., 2 spare copies of everything for high availability). Each node ran 18 logical/9 physical cores. With 3-way replication across 3 nodes, every node had to handle all 100,000 events per second, which works out to 100,000 / 18, or about 5,555 events per second per logical core. `linger.ms` was set to 1, which keeps producer-side batching delay to a minimum so that each message can be acted on with the lowest possible latency.
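The sizing arithmetic above can be reproduced in a few lines. The node counts, core counts, KFactor, and replication factor are the ones stated in this section; the variable names are illustrative.

```python
TARGET_TPS = 100_000

# Volt: 3 nodes x 8 physical cores, KFactor 1 (two copies of everything).
volt_nodes = 3
volt_physical_cores_per_node = 8
k_factor = 1

volt_cores = volt_nodes * volt_physical_cores_per_node
volt_core_pairs = volt_cores // (k_factor + 1)
volt_rate_per_pair = TARGET_TPS // volt_core_pairs

# Redpanda: replication factor 3 on 3 nodes, so every node handles every event.
redpanda_logical_cores_per_node = 18
redpanda_rate_per_node = TARGET_TPS
redpanda_rate_per_core = redpanda_rate_per_node // redpanda_logical_cores_per_node

print(f"Volt: {volt_cores} cores, {volt_core_pairs} pairs, "
      f"{volt_rate_per_pair:,} msg/s per pair")
print(f"Redpanda: {redpanda_rate_per_core:,} events/s per logical core")
```

Integer division is used to match the rounded-down figures quoted in the text.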

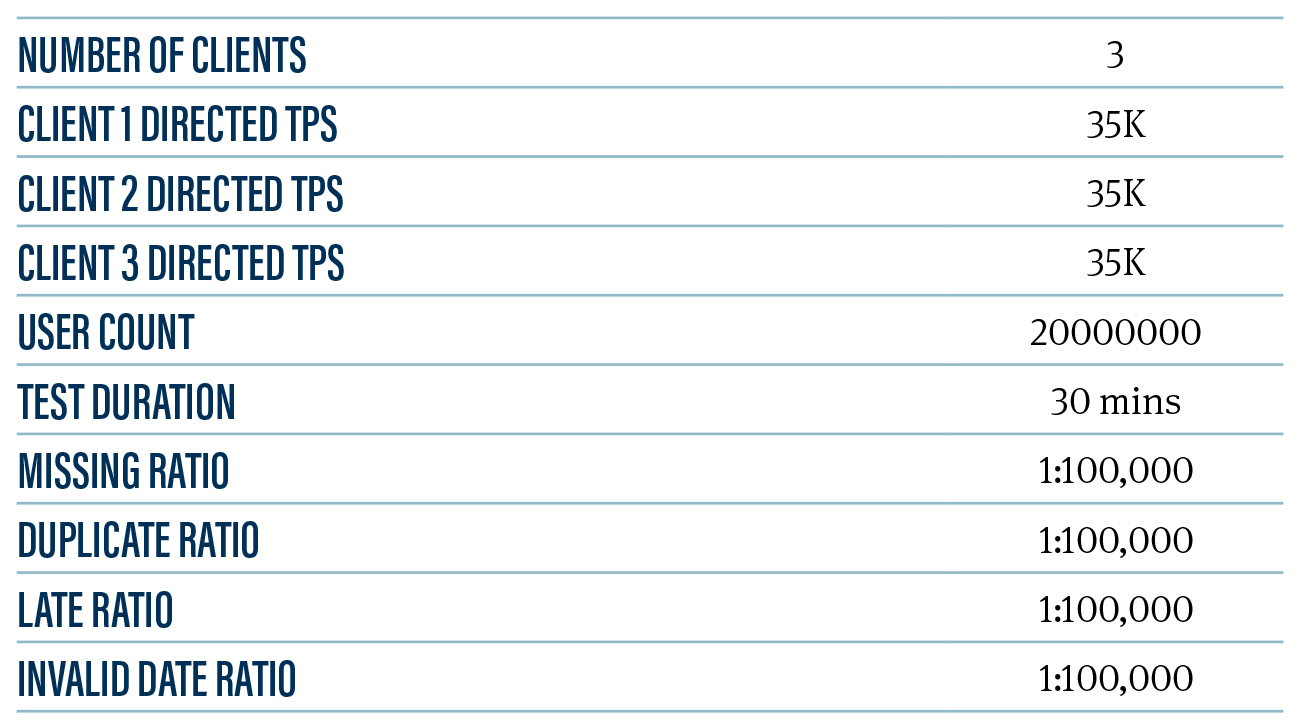

To generate and publish data we ran three client processes at 35,000 TPS each. To read data we ran one consumer for the entire duration of the test.

Kubernetes cluster

For information on setup and configurations, see k8s-volt-aggdemo.

Volt

Redpanda

Test Configuration

For details of what the ratios and other parameters mean, see voltdb-aggdemo.

RESULTS

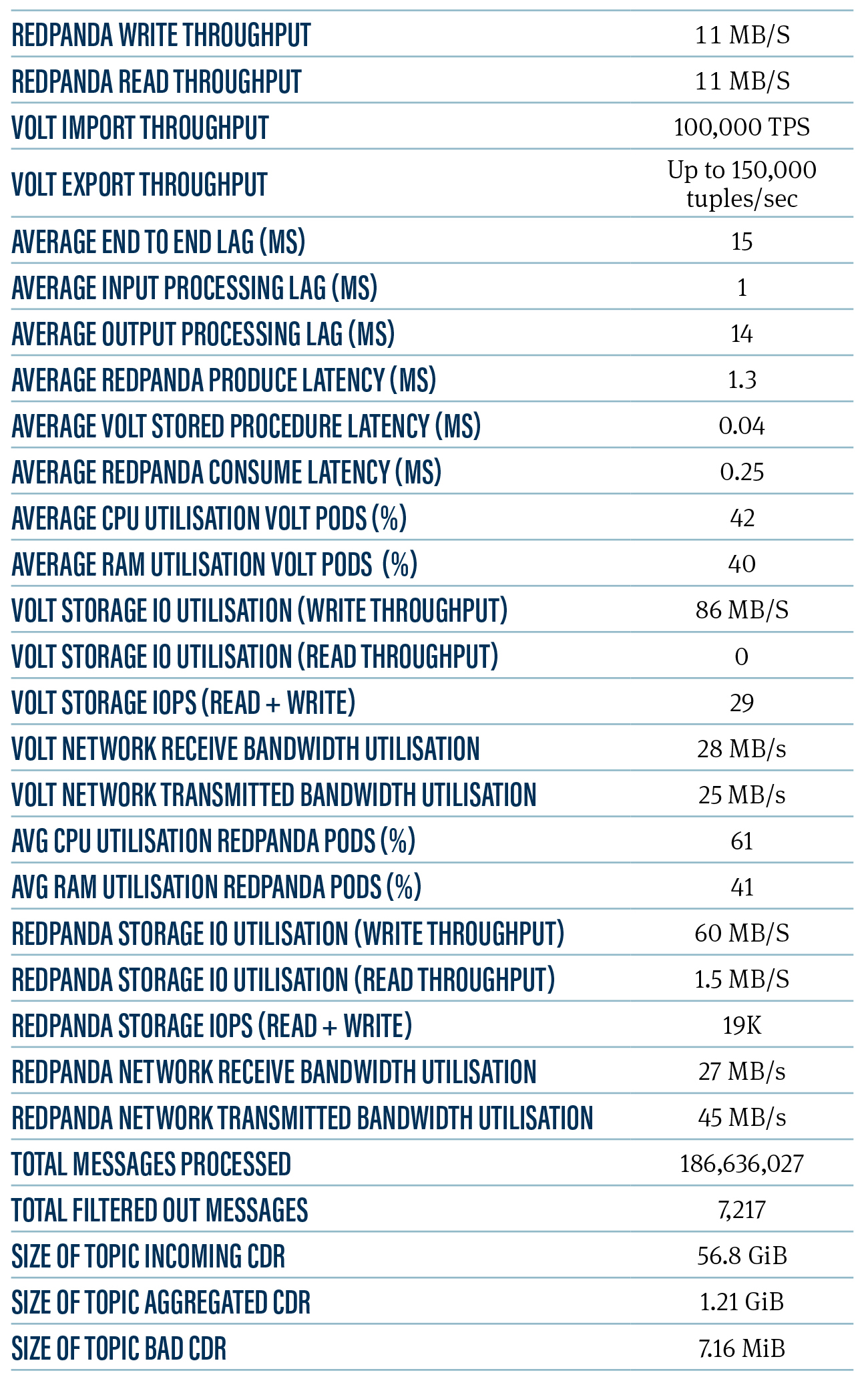

The system was able to sustain a workload of 103,092 TPS and an average 15 ms latency with the following CPU loads:

- Client – 18-20%

- Redpanda – 61.4%

- Volt – 42.5%

We were able to go as high as 172,935 TPS at the expense of latency with the following observed behaviors:

- >80% CPU utilization for Redpanda pods

- >55% CPU utilization for Volt pods

- Average end-to-end latency of up to 3 seconds

Of course, your use case(s) will determine your prioritization. Volt users have traditionally been most interested in ultra-low latency, but we also have customers who value throughput more than latency. On the Redpanda side, you can add partitions so that more consumers can run in parallel and reduce lag, and you can add brokers and/or give each broker more resources. Tweaks on the client side can yield significant improvements, too.

Note: The only gating factor we observed was the time we had to run this benchmark. Volt and Redpanda are planning a much bigger one, at 1,000,000+ TPS, later this year. It’s worth noting that during this workload, the Redpanda producer and consumer latency and the Volt procedure processing latency were all under 1 millisecond each.

CONCLUSION

This benchmark demonstrated that Volt and Redpanda can combine to deliver the low end-to-end latency that modern applications need, without giving up scale, speed, or consistency.

This no-compromise aspect is very important. Kafka’s emergence as the de facto standard for data transmission has left unanswered the question of how to make accurate decisions on Kafka-scale data streams, with neither kSQL nor Flink being optimal. Volt, when paired with Redpanda, is ideally suited for this.

TCO is also emerging as a factor as cloud costs add up quickly. Redpanda and Volt help with TCO because they are optimized for efficient and scalable CPU and hardware usage.

As for next steps, this benchmark exceeded its goal of 100,000 transactions per second. We know of no architectural constraints in either product that would prevent 10,000,000 transactions per second, given a bigger, more powerful network backend and minor configuration tweaks on the clients.

To get started with Redpanda for a simpler, lower-cost and more performant Kafka backend, check the documentation and browse the Redpanda blog for tutorials. To chat with the team and fellow Redpanda users, join the Redpanda Community on Slack.