Key Takeaways

- High availability is paramount due to the shift from human-to-computer to machine-to-machine communication, where devices require rapid and consistent responses.

- Attempting to add high availability to a system after it has already launched is a common pitfall and often reduces availability.

- Gaining executive buy-in is crucial for securing the necessary budget and resources to properly implement and maintain a highly available system.

- Simplicity is key; adding unnecessary components increases the potential for failure and complexity, making the system harder to manage and test.

- Continuous testing and monitoring of response times are essential for identifying and addressing potential issues before they lead to downtime.

In the age of big-data-turned-massive-data, maintaining high availability, aka ultra-reliability, aka ‘uptime’, has become “paramount”, to use a ChatGPT word.

Why?

Because until recently, most computer interaction was human-to-computer, and humans are (relatively) flexible when systems misbehave: they can try a few more times and then go off and do something else before trying again later.

But we now live in the age of machine-to-machine communication. Devices are far less patient than humans and frequently can’t continue to function without an answer. So when they don’t get one, they ask again — and again… and again…

We thus have two compelling reasons for why high availability is so important:

1. Automated systems depend on each other for the rapid responses that enable them to continue working. The failure of one such system will rapidly ripple throughout the entire ecosystem of automated systems, causing widespread chaos and erratic behavior.

2. Once an outage starts, a matching backlog of automatic requests will build up around it. Even if it’s only 10 seconds, that’s a 10-second backlog that will have to be processed when ‘normal service has resumed’. A badly engineered system could fail again in this scenario, or requests could be handled out of sequence. All manner of things can go wrong in ways that are almost impossible to debug.

All of this ultimately leads to your mission-critical applications failing in mission-critical ways that ultimately lead to mission-failing customer churn.

Are we saying your business depends on high availability? In a sense, yes, especially if it runs on applications that can’t afford to lose data or go down.

With that in mind, let’s define what high availability actually means (apart from ‘uptime’).

Table Of Contents

- What is high availability?

- The Two Most Common High Availability Pitfalls

- The Path to Achieving and Maintaining High Availability (ie — our six rules)

- 1. Get executive “buy-in”

- 2. Keep it simple

- 3. Test well and regularly

- 4. Maintain control

- 5. Reconcile modern DevOps practices with the need for stability

- 6. Track and report response times

- Why Volt for High Availability

What is high availability?

So what do we mean when we talk about high availability/ultra-reliability?

All systems have and maintain some degree of uptime — and that’s the key word here: “degree”.

In a large enough system, something, somewhere, will always be broken or go down.The trick is to make sure your users don’t notice, and that’s why high availability is not the absolute concept it may look like at first glance.

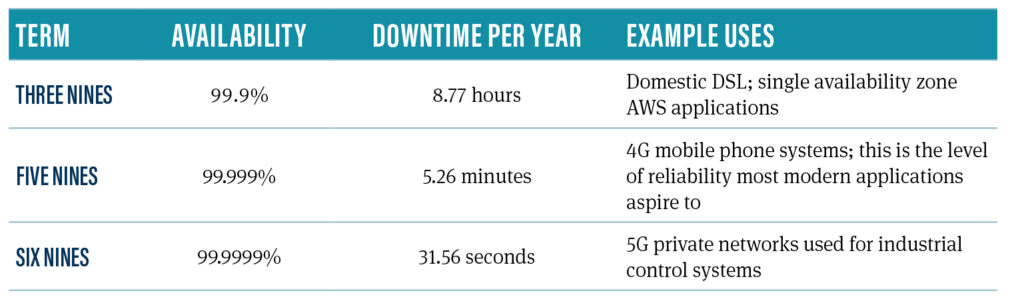

People generally use the ‘nines’ system to describe availability, with the two most common scenarios being ‘three nines’ and ‘five nines’:

With this definition set, let’s now take a quick look at how many companies mess up high availability before they’ve even started trying to achieve it.

The Two Most Common High Availability Pitfalls

There are many ways to get to high availability, but if you’re going to achieve it — and even more importantly maintain it — it’s something you need to bake in from day one of the project.

The most common and obvious path to failure with high availability is trying to make a system highly available after launch. But, as with real-time decisioning, high availability isn’t something you can just tack on, and experience has proven that ‘post-launch’ hacks will just as often reduce availability as increase it. On top of that, you need things like geo-replication to ensure availability even in times of crisis.

The other risk is over-engineering your system into commercial extinction. The cold truth is that ‘extra nines’ of reliability can add ‘extra zeros’ to the budget. While everyone will instinctively assume that they are in the ‘five nines’ space, it’s an assumption that will need to be justified at the CxO level. Don’t wait till the AWS bill comes in before doing this!

Below is a list of six rules that anyone aiming for ‘five nines’ needs to consider, based on what we’ve seen in the field.

The Path to Achieving and Maintaining High Availability (ie — our six rules)

With the above definition and challenges in mind, let’s dig into our six ways to ensure your systems both become and stay highly available,

1. Get executive “buy-in”

This is rule one for a reason. You can propose whatever you want on a whiteboard, but at the end of the day, someone has to pay for it. A disconnect between what the business wants versus what the business is willing to pay for is going to be painful for everybody.

The worst-case scenario for lack of executive commitment occurs when you go live with a system that has lofty goals for availability but doesn’t have the budget or people to do the job properly.

No system runs itself, and you can end up with far lower reliability than a much simpler system because you can’t afford the test and training environments to competently operate it.

2. Keep it simple

Thanks to the magic of open-source and hyperscalers, it’s very easy to rapidly assemble a system from a large number of third-party components. But this can be a mistake.

Every time you add anything to a system you are adding another path to failure, another set of knowledge to learn, and another component that can be subject to a downtime to apply a mandatory security patch.

The Log4J vulnerability is a classic example of an apparently harmless component suddenly becoming problematic. Note that we’re not suggesting you ignore available libraries and write your entire stack by hand. We’re just saying you need to regard adding every additional component as a tradeoff between convenience and risk.

3. Test well and regularly

Be sure to set up a serious testing environment – one with a copy of every piece of equipment you own, in a vaguely-realistic configuration. For example, the Australian phone company Telstra runs a copy of the entire Australian phone system. Expensive? Yes, but not as expensive as bringing the whole system down. This ties back to ‘rule 1’ — you and everyone who works on the project need to understand how everything works, be able to see what happens when you make changes, and generally be 100% comfortable in the virtual world you have created.

If your co-workers’ response to testing is, “that would be too complicated”, then it might be appropriate to point out that if the system is too complicated to replicate as a working model, then, a la rule 2, maybe you need to simplify it? d

4. Maintain control

This may sound a bit crazy, but if you’re going to own the latency/availability SLA, then you need to ‘own’ as much of the call path as possible. What you own, you control. Every time the control flow of your code goes into something you don’t control, you lose control of latency, and could – a couple of milliseconds later – blow past the latency requirement in your SLA. Using asynchronous programming also helps here, as when calls never return you can generate a generic ‘busy signal’ response that always seems to go down better than silence.

5. Reconcile modern DevOps practices with the need for stability

We used to rarely upgrade software. Now, thanks to DevOps, people are pushing releases weekly, even daily.

But this approach may not be helpful if your overriding goal is minimal downtime. Aside from the obvious disruption caused by deploying upgrades, every time you deploy a change you risk causing an outage. Let’s assume a 1% chance of a 15-minute outage per deployment. If you do 50 releases a year, that’s an annual average of 7.5 minutes of deployment-induced downtime, which means you can no longer meet a ‘five nines’ SLA, which implies less than six minutes per year.

Like the other points in this list, this is not an absolute, but it’s something you need to consider, and you should have a plan that reconciles the different forces in play here.

6. Track and report response times

The last rule is fairly obvious. You can’t control what you can’t measure, especially if you have to surrender control to a third-party API or web service. Sooner or later, one of your dependencies will misbehave and you will be the visible point of failure – so you will need to show the failure is elsewhere.

The time to instrument the code is when you write the application — not during an outage. You also need to track not just customer-facing times, but also internal times, especially when working with microservices. How you do this without adding complexity and slowing things down is another challenge. Sampling every thousandth event, for example, might give you a degree of visibility without bogging the system down.

Why Volt for High Availability

Building an ultra-reliable data platform involves deep executive commitment followed by rational expectations, careful design choices, and ongoing hard work. Skipping steps, trying to do things ‘on the cheap’, or assuming you can make it highly available later on are key pitfalls to avoid.

The best question you can ask is, “What’s the simplest way we can meet this need?”.

The Volt Active Data platform is designed to let you make lots of reliable decisions, very quickly, at scale. It’s an integral part of critical national infrastructure and phone systems in multiple countries around the world. Reliability, deterministic behavior, and minimal downtime are hallmarks of what we offer. Volt is reliable both in the context of individual clusters and global networks of active-active clusters

Volt will continue to function with the loss of a node because all work and state is managed by at least two nodes. This means that losing a node is an annoyance, but not a problem, as there will always be a surviving node that can do the work and remain fully up to date.

Even if you lose an entire cluster, Active(N) ensures your workloads keep running with zero configuration changes or downtime, which means the minimal possible impact on end users. It’s what we do.