Key Takeaways

- An ‘out of the box’ Volt configuration can easily hit just under 500K transactions per second (TPS).

- Volt scales in a near-linear manner, especially from a TCO perspective.

- At less than US$2.50/hour per 100,000 TPS, Volt is highly competitive.

- A public repo with the scripts used to run YCSB has been created, making it possible for interested parties to run the benchmark themselves.

- The latest YCSB results demonstrate the cost-effectiveness of Volt at scale on Amazon.

Volt has been involved with the Yahoo! Cloud Serving Benchmark (YCSB) for some time. Every now and then we re-run the benchmark to see how we’re holding up. We recently re-ran it against newer Amazon hardware and discovered that:

- An ‘out of the box’ Volt configuration can easily hit just under 500K transactions per second (TPS).

- Volt scales in a near-linear manner, especially from a TCO perspective.

- At less than US$2.50/hour per 100,000 TPS, we are highly competitive.

We’ve also made it possible for interested parties to run this benchmark themselves by creating a public repo with the scripts we use to run YCSB.

For this iteration, we focused on how well Volt scales and how scaling affects TCO. Historically, people talk about YCSB in the chest-beating context of “product X is marginally faster than product Y”. By adding TCO as a factor, we think we’ll make our numbers ‘real’ and help people evaluating technology make better choices. We’re also going to describe our configuration in enough detail for you to reproduce our results.

CONFIGURATION

YCSB consists of several core workloads, labeled ‘A’ to ‘F’. The one of most interest to us is ‘A’, which issues a 50:50 mix of reads and writes against a Key Value store. Volt was never designed as a Key Value store, but it works really well as one. Since Volt’s big strength is its ability to handle high-volume streams of writes, workload ‘A’ is a logical test case for us to focus on.
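To make the workload ‘A’ operation mix concrete, here is a minimal sketch that issues reads and updates in equal proportion against an in-memory dict. It is not YCSB code. The function name `run_workload_a`, the `user…` key naming and the record shape are hypothetical. Keys are also chosen uniformly here, whereas YCSB’s stock workload ‘A’ uses a Zipfian request distribution. The 0.5 threshold corresponds to YCSB’s `readproportion=0.5` / `updateproportion=0.5` settings.

```python
import random


def run_workload_a(store, num_ops, key_count, seed=42):
    """Issue a 50:50 mix of reads and updates against an in-memory dict.

    Hypothetical sketch: keys are picked uniformly at random, whereas YCSB's
    workload A uses a Zipfian request distribution by default.
    """
    rng = random.Random(seed)  # seeded so runs are repeatable
    counts = {"read": 0, "update": 0}
    for _ in range(num_ops):
        key = f"user{rng.randrange(key_count)}"  # hypothetical key naming
        if rng.random() < 0.5:  # mirrors readproportion=0.5
            store.get(key)
            counts["read"] += 1
        else:  # mirrors updateproportion=0.5
            store[key] = {"field0": "x" * 100}
            counts["update"] += 1
    return counts


# Preload 1,000 records, then run 100,000 operations.
store = {f"user{i}": {"field0": "x" * 100} for i in range(1000)}
counts = run_workload_a(store, num_ops=100_000, key_count=1000)
print(counts)
```

Half of the operations change data. That is why this workload exercises a database’s write path far harder than the read-mostly YCSB workloads do.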

Another change from previous YCSB tests is that, instead of picking a single configuration and maxing it out, we kept stepping up the configuration and hardware until we ran out of time and budget. Each time, we re-ran the test with an increasing number of YCSB threads. Threads are an imperfect way to scale YCSB. Instead of requesting a target number of transactions per second, you add threads. Each thread runs as fast as it can, and all of them run inside a single Java Virtual Machine (JVM). This means you need an increasingly large server to run the client. In the AWS universe, you can also find yourself wondering whether the network can cope with a single client full of YCSB threads.
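Because each thread sends its next request as soon as the previous one returns, the load is closed-loop. Little’s Law (throughput X = N / R, with N busy threads, mean response time R and no think time) then gives a rough model of why TPS grows with the thread count. The sketch below applies that law. The 0.5 ms round trip is a hypothetical figure, not a measurement from our runs, and the helper names are our own.

```python
import math


def closed_loop_tps(threads, mean_latency_ms):
    """Little's Law with zero think time: throughput X = N / R."""
    return threads / (mean_latency_ms / 1000.0)


def threads_needed(target_tps, mean_latency_ms):
    """Invert X = N / R: busy threads required to sustain target_tps."""
    return math.ceil(target_tps * mean_latency_ms / 1000.0)


# Hypothetical 0.5 ms mean round trip per operation (not a measured figure).
print(closed_loop_tps(threads=250, mean_latency_ms=0.5))
print(threads_needed(target_tps=500_000, mean_latency_ms=0.5))
```

The model assumes the client itself is never the bottleneck. In practice, hundreds of threads in one JVM eventually compete for client CPU and network bandwidth, which pushes R up and flattens the curve. That is why the client machine has to grow along with the cluster.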

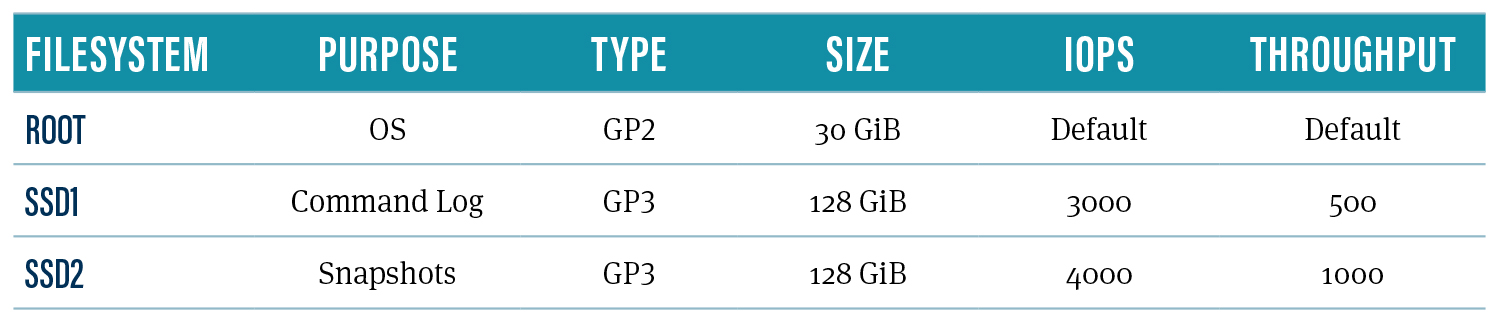

The base component of our configuration was as follows:

An AWS c5.9xlarge server with the following filesystem. A c5.9xlarge has 36 logical cores (18 physical) and 10 Gbps networking.

We found this configuration perfect for the benchmark, but as volumes increased we had to upgrade the server that ran the YCSB client threads.

Note that, with the exception of workload ‘E’, which saturates the network, we have yet to hit a limit on what Volt and YCSB can do.
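A back-of-envelope calculation shows why a scan-heavy workload fills the network long before a point-read workload does. The sketch makes several assumptions:

- YCSB’s default record shape of 10 fields of 100 bytes (`fieldcount`, `fieldlength`).
- Scan lengths uniform between 1 and `maxscanlength`. We assume `maxscanlength=100`, which we believe matches the stock workload ‘E’ file.
- A 10 Gbps link.
- Only response payload bytes count, with no protocol overhead.

The function names are hypothetical.

```python
def max_scans_per_second(link_gbps, max_scan_length, field_count=10, field_length=100):
    """Rough ceiling on scan throughput imposed by network bandwidth alone.

    Assumes records of field_count fields of field_length bytes, scan lengths
    uniform in [1, max_scan_length], and no protocol overhead.
    """
    record_bytes = field_count * field_length
    mean_records_per_scan = (1 + max_scan_length) / 2
    bytes_per_scan = mean_records_per_scan * record_bytes
    link_bytes_per_second = link_gbps * 1e9 / 8  # bits -> bytes
    return link_bytes_per_second / bytes_per_scan


def max_reads_per_second(link_gbps, field_count=10, field_length=100):
    """Same bandwidth ceiling for single-record reads, for comparison."""
    return link_gbps * 1e9 / 8 / (field_count * field_length)


# 10 Gbps link, scans of up to 100 records (assumed workload E setting).
print(round(max_scans_per_second(10, 100)))
print(round(max_reads_per_second(10)))
```

Under these assumptions a scan moves about 50 times as many bytes as a point read. The link therefore caps out at roughly 25,000 scans per second, versus about 1.25 million single-record reads.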

RESULTS

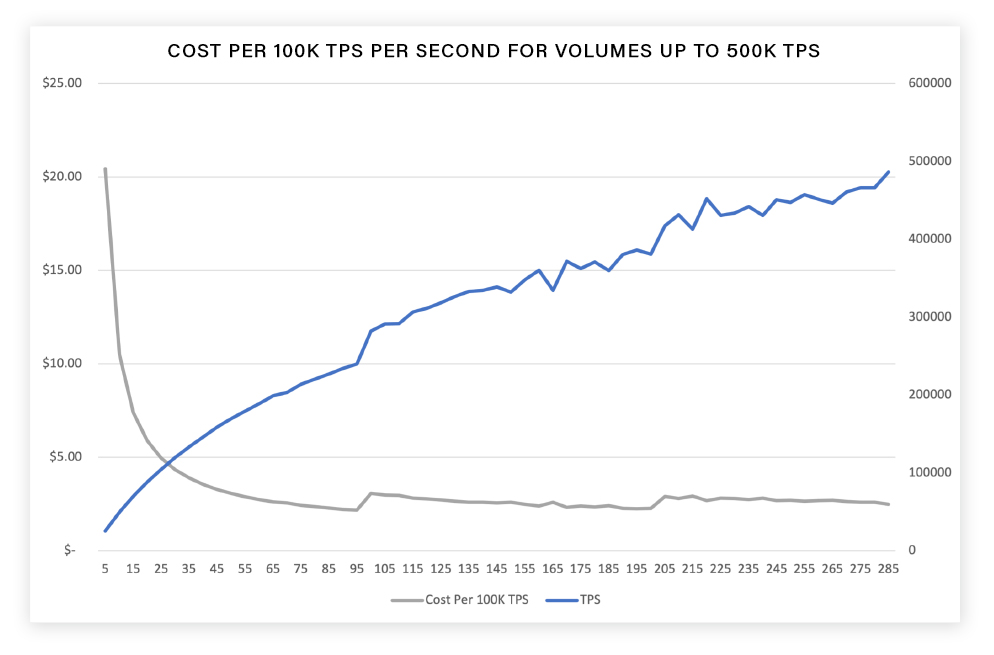

Traditionally, people show a graph demonstrating linear scaling of inputs/outputs. Our graph is slightly different: it shows how cost per transaction initially drops and then remains more or less constant as volumes increase to 500,000 read/write transactions per second. The blue line is TPS, which is determined by the number of YCSB threads we run. The grey line is the price per transaction, which plummets as we go from zero to about 60,000 TPS. We then see upticks as we move from 3-node to 5-node to 7-node clusters.
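The shape of the grey line follows from simple arithmetic. On a fixed cluster, the hourly cost stays the same while TPS rises, so cost per transaction falls. Adding nodes raises the hourly cost before the extra capacity is used, which produces an uptick. The same calculation yields a figure in US$/hour per 100,000 TPS. In the sketch below, the $0.60/node-hour price and the TPS points are hypothetical illustrations, not the Dublin spot prices or the throughput we measured.

```python
from typing import Tuple


def cost_metrics(nodes: int, price_per_node_hour: float, tps: float) -> Tuple[float, float]:
    """Return (US$ per million transactions, US$/hour per 100,000 TPS)."""
    cluster_cost_per_hour = nodes * price_per_node_hour
    transactions_per_hour = tps * 3600
    per_million_txn = cluster_cost_per_hour / transactions_per_hour * 1_000_000
    per_100k_tps_hour = cluster_cost_per_hour / (tps / 100_000)
    return per_million_txn, per_100k_tps_hour


# Hypothetical $0.60/node-hour price and hypothetical TPS points, for illustration only.
for nodes, tps in [(3, 20_000), (3, 60_000), (3, 180_000), (5, 200_000)]:
    per_million, per_100k = cost_metrics(nodes, 0.60, tps)
    print(f"{nodes} nodes @ {tps:>7,} TPS: ${per_million:.4f}/M txn, ${per_100k:.2f}/h per 100K TPS")
```

With these made-up inputs, the 3-node cost per 100K TPS falls from $9.00 to $1.00 per hour as load grows. It then rises to $1.50 when the cluster moves to 5 nodes at only slightly higher throughput. That is the drop-then-uptick pattern described above.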

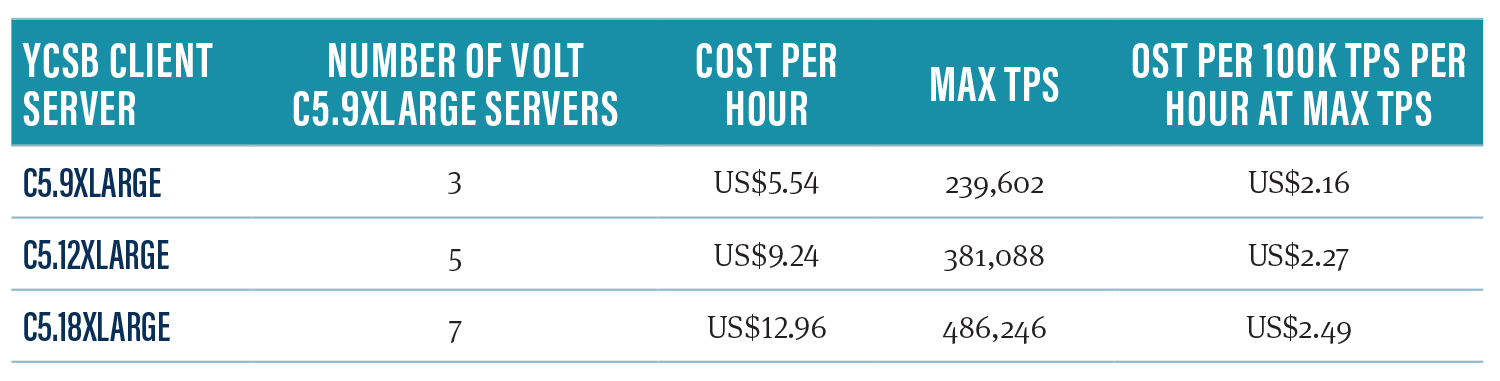

Another way to look at this is to see what we got for each configuration:

Notes:

- Server prices are Dublin region spot instance prices.

- 99th percentile latency never exceeded 2.799 ms.

- We peaked at running 285 YCSB threads. We may be approaching some kind of internal YCSB limit.
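A 99th percentile figure means that 99 out of every 100 operations completed at or below that latency. The sketch below uses the nearest-rank definition of a percentile. YCSB computes its own percentile figures, and its method depends on the measurement type in use (it may be histogram-based), so this sketch will not reproduce YCSB’s numbers exactly. The latency samples are hypothetical.

```python
import math
from typing import Sequence


def percentile_nearest_rank(samples_ms: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of samples at or below it."""
    if not samples_ms:
        raise ValueError("need at least one latency sample")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples_ms)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Hypothetical latencies: 98 fast operations and two slow outliers.
latencies = [0.4] * 98 + [2.5, 9.0]
print(percentile_nearest_rank(latencies, 99))  # prints 2.5
```

The single worst operation (9.0 ms) does not affect p99 here. A p99 ceiling is a statement about the bulk of requests, not a guarantee on the maximum.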

CONCLUSION

We believe this latest YCSB run is significant because it shows the cost-effectiveness of Volt at scale on Amazon. We encourage you to try it out for yourself and see if you can reproduce these results. Many data platforms promise performance and throughput, but very few can offer them at a reasonable TCO.

If you’d like more information or have any questions, please contact us.