Key Takeaways

- Volt Active Data is effective in supporting fast data applications across various industries.

- 5G technology promotes high-speed real-time applications, making it a suitable area to benchmark Volt Active Data.

- The benchmark aimed to demonstrate Volt Active Data's linear scalability by scaling a transactional application horizontally.

- The benchmark setup involved using Google Cloud Platform, custom networking, and tools for automation, data population, and metric gathering.

- Volt Active Data's throughput scales linearly with the number of nodes, achieving over 3 million transactions per second on a 27-node cluster, while maintaining relatively constant latency.

Since joining Volt Active Data exactly 3 years ago as a Sales Engineer, I have worked with customers from different industries facing different fast data challenges. One of my most important duties has been to convey how effectively Volt Active Data supports such fast data applications. The challenge is not only to solve the customer's problem at hand, but also to keep sight of the possibilities that Volt Active Data, probably the fastest database on the market, can open up in each industry.

5G

Perhaps no technological revolution now upon us promotes high-speed real-time applications as much as 5G. To show that such applications are exactly what Volt Active Data was built for, we decided to benchmark it on an Online Charging application. Charging policies must be managed for the increasingly numerous and diverse connected devices that people will own, so telecom charging has to grow in both scale and complexity without giving up its consistency guarantees.

Higher levels of complexity will generate pressure on data processing systems


Benchmark

Our goal for the benchmark was to demonstrate the linear scalability of Volt Active Data when a transactional application is scaled horizontally. After experimenting with AWS and Docker, we chose to run the benchmark on Google Cloud Platform for two reasons: clock synchronization across the Compute Engine instances was never an issue, and building infrastructure as code with Python and Jinja templates was easy.
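On Google Cloud, Python and Jinja are the two template formats of Deployment Manager, so the sketch below assumes that tool. A Deployment Manager Python template exposes a `GenerateConfig(context)` function that returns a dict with a `resources` list; here it stamps out one Compute Engine instance per database node. The property names (`nodeCount`, `zone`, `machineType`, `sourceImage`, `subnetwork`) and the `volt-server-N` naming scheme are hypothetical, not taken from the actual benchmark tooling.

```python
"""Hypothetical Deployment Manager Python template for a Volt Active Data cluster.

Property names and resource naming are illustrative assumptions.
"""

COMPUTE = "https://www.googleapis.com/compute/v1"


def GenerateConfig(context):
    props = context.properties
    project = context.env["project"]
    zone = props["zone"]
    machine_type = f"{COMPUTE}/projects/{project}/zones/{zone}/machineTypes/{props['machineType']}"

    resources = []
    for i in range(props["nodeCount"]):
        resources.append({
            "name": f"volt-server-{i + 1}",
            "type": "compute.v1.instance",
            "properties": {
                "zone": zone,
                "machineType": machine_type,
                "disks": [{
                    "deviceName": "boot",
                    "type": "PERSISTENT",
                    "boot": True,
                    "autoDelete": True,
                    "initializeParams": {"sourceImage": props["sourceImage"]},
                }],
                # No accessConfigs: the instance gets only an internal IP,
                # so outbound downloads must go through a NAT gateway.
                "networkInterfaces": [{"subnetwork": props["subnetwork"]}],
            },
        })
    return {"resources": resources}
```

Changing `nodeCount` is then enough to move between the 3-, 9-, 18-, and 27-node configurations without editing the template.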

David Rolfe, a colleague from Dublin, kindly built the Online Charging application that I used for the benchmark. The highly transactional workload mainly manages user balances and handles purchases of new services.

To run the benchmark, I built Volt Active Data clusters of 3, 9, 18, and 27 nodes and drove each one with requests from a client cluster of the same size. The goal was to generate enough load to keep the database cluster fully busy without over- or under-utilizing it. A 5-minute warmup let the cluster settle into a stable performance profile; the benchmark then ran for 10 more minutes, after which the final metrics were gathered.
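The measurement protocol (a warmup whose results are discarded, followed by a fixed measurement window) can be sketched as below. This is a simplified, single-threaded illustration under stated assumptions: the real client used Volt Active Data's asynchronous Java client over many connections, and `workload` stands in for a hypothetical function that issues one transaction.

```python
import time


def run_phased(workload, warmup_s, measure_s):
    """Call workload() through a warmup phase, then count calls in a measurement window.

    Returns the transactions per second observed in the measurement window only.
    """
    # Warmup: let caches, JIT compilation, and connections settle; results are discarded.
    deadline = time.monotonic() + warmup_s
    while time.monotonic() < deadline:
        workload()

    # Measurement: count completed transactions over the fixed window.
    count = 0
    start = time.monotonic()
    end = start + measure_s
    while time.monotonic() < end:
        workload()
        count += 1
    elapsed = time.monotonic() - start
    return count / elapsed
```

For the runs described here, the call would be `run_phased(send_one_txn, warmup_s=5 * 60, measure_s=10 * 60)`, with `send_one_txn` hypothetical.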

Environment and Setup

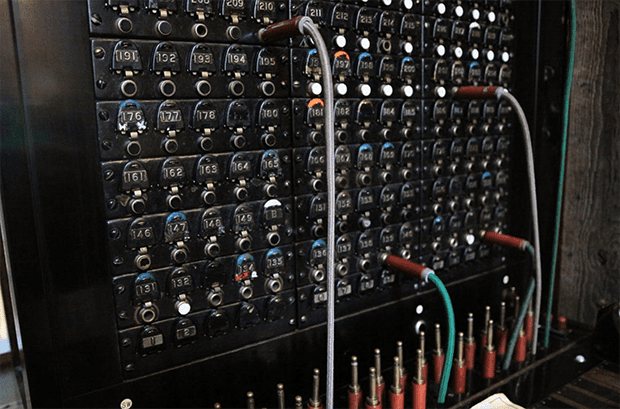

With the application built, I spent most of my time on tooling to automate provisioning and instantiating the deployments, and to trigger the various workflows: data population, workload generation, and gathering the metrics at the end.
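One way to chain those workflows is a small runner that executes named steps in order, times each one, and stops at the first failure so that, for example, no workload is generated against a half-populated database. This is a minimal sketch, not the actual tooling; the step functions are hypothetical.

```python
import time


def run_pipeline(steps):
    """Run (name, callable) steps in order and stop at the first failure.

    Returns one (name, seconds, error) tuple per attempted step, where error is
    None on success or the exception's repr on failure.
    """
    report = []
    for name, step in steps:
        start = time.monotonic()
        error = None
        try:
            step()
        except Exception as exc:  # record the failure; later steps are skipped
            error = repr(exc)
        report.append((name, time.monotonic() - start, error))
        if error is not None:
            break
    return report
```

A run would look like `run_pipeline([("provision", provision), ("populate", populate_data), ("workload", generate_workload), ("metrics", collect_metrics)])`, where all four functions are hypothetical placeholders.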

All the compute instances were on the same subnet on a custom internal network with a NAT gateway to allow the instances to download artifacts and dependencies from the internet. Firewall rules allowed me to access the Volt Active Data Management Console to monitor the database and verify the deployment.
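Assuming the same Deployment Manager setup, the firewall rule for the Management Console can be expressed as another resource. The sketch assumes the console is served on port 8080 (Volt Active Data's default HTTP port) and that access is restricted to a single operator address; the resource name and the `$(ref.volt-network.selfLink)` network reference are hypothetical.

```python
def firewall_resource(network_self_link, source_cidr, port=8080):
    """Return a Deployment Manager resource allowing inbound TCP `port` from `source_cidr`."""
    return {
        "name": "allow-volt-console",  # hypothetical name
        "type": "compute.v1.firewall",
        "properties": {
            "network": network_self_link,
            "direction": "INGRESS",
            # Restrict to a known address rather than 0.0.0.0/0.
            "sourceRanges": [source_cidr],
            "allowed": [{"IPProtocol": "tcp", "ports": [str(port)]}],
        },
    }


if __name__ == "__main__":
    # 203.0.113.0/24 is a documentation-only range used here as a placeholder.
    print(firewall_resource("$(ref.volt-network.selfLink)", "203.0.113.7/32"))
```

Keeping the database's client and internal ports closed to the outside and opening only the console port matches the setup described above, where all cluster traffic stays on the internal subnet.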

Starting the database on a cluster was straightforward, since Volt Active Data can form a cluster using hostnames alone (Redis doesn't allow that, BTW). All one needs to do is issue the start command with the `--host` parameter:

`voltdb start --host=server1,server2,server3,…`

Also, there is no need to install ZooKeeper or any other external clustering software to run Volt Active Data. The only dependencies are Python and Java.
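Because the cluster size changed from run to run (3 to 27 nodes), it helps to generate the start command from the host list instead of typing it. A minimal sketch, assuming hostnames follow the `server1`, `server2`, ... pattern from the command above; the helper name is hypothetical:

```python
import shlex


def voltdb_start_command(hosts):
    """Build the argument list for `voltdb start` with a comma-separated --host list."""
    if not hosts:
        raise ValueError("at least one host is required")
    return ["voltdb", "start", "--host=" + ",".join(hosts)]


hosts = [f"server{i}" for i in range(1, 4)]
print(shlex.join(voltdb_start_command(hosts)))
# voltdb start --host=server1,server2,server3
```

The same host list is typically passed on every node, so the identical argument list can be launched on each instance (for example with `subprocess.run(cmd, check=True)` over SSH).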

Volt Active Data's Python client came in handy in the initialization scripts, which triggered actions based on the state of the database. We used Volt Active Data's Java client for the main benchmarking application because it offers client affinity, thread safety for parallel connections, asynchronous procedure calls, backpressure, and topology awareness, to name a few features. On top of that, the ability to put complex logic on the database server as SQL+Java stored procedures keeps the application clients simple and scalable.
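A typical state-based trigger in such scripts is "wait until the database accepts clients, then load data". The sketch below uses only the standard library and polls TCP port 21212, Volt Active Data's default client port; it is a weaker check than querying the database through its Python client, which the actual scripts used. The function name and defaults are hypothetical.

```python
import socket
import time


def wait_for_port(host, port=21212, timeout_s=300.0, interval_s=2.0):
    """Poll until a TCP connection to host:port succeeds or timeout_s elapses.

    Returns True if the port accepted a connection, False on timeout.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval_s):
                return True  # something is listening; close and report success
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval_s)
```

An initialization script might call `wait_for_port("server1")` before starting the bulk load, and abort the run if it returns False.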

Results and Report

After the initial data is bulk loaded, the application runs the workload for 5 minutes as a warmup so the system can reach a steady state. After a further 10-minute run, throughput and 99th and 99.9th percentile latency (two and three nines) are captured. We observed that Volt Active Data's throughput scales linearly with the number of nodes in the cluster while latency remains relatively constant, with a peak of over 3 million transactions per second on the 27-node cluster.
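To make the two reported quantities concrete: "two nines" and "three nines" latency are the 99th and 99.9th percentiles of per-transaction latency, and linear scaling means throughput divided by a perfectly linear extrapolation from the smallest cluster stays close to 1.0. A minimal sketch of both calculations, using the nearest-rank percentile definition (an assumption; the benchmark's own percentile method is not stated):

```python
import math
from fractions import Fraction


def percentile(samples, p):
    """Nearest-rank percentile of a non-empty sample list, with p in (0, 100]."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    # Fraction avoids float rounding pushing the rank up by one (e.g. for p=99.9).
    rank = math.ceil(Fraction(str(p)) * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def scaling_efficiency(base_nodes, base_tps, nodes, tps):
    """Measured throughput divided by perfectly linear extrapolation from the baseline."""
    return tps / (base_tps * nodes / base_nodes)
```

With latency samples collected in milliseconds, `percentile(samples, 99)` and `percentile(samples, 99.9)` give the two- and three-nines figures, and `scaling_efficiency(3, tps_3, 27, tps_27)` compares the 27-node result against the 3-node baseline.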

Find the full benchmark report. Your critique and comments are welcome here!