Key Takeaways

- Volt Active Data processes billions of transactions daily, supporting various innovative applications such as call approvals, mobile game event processing, road race tracking, smart grid management, casino bet validation, and credit card fraud detection.

- The shift from batch processing to real-time processing is crucial, with Volt Active Data capable of handling high throughput for streaming data from sources like IoT sensors and mobile devices.

- SQL remains a dominant force, and Volt Active Data continues to enhance its SQL capabilities with features like windowing analytic SQL, geospatial SQL support, and real-time materialized views.

- Building a production-ready operational database requires high availability, correctness, predictable latency, durability, and performance, all of which Volt Active Data rigorously tests through error randomization and independent testing.

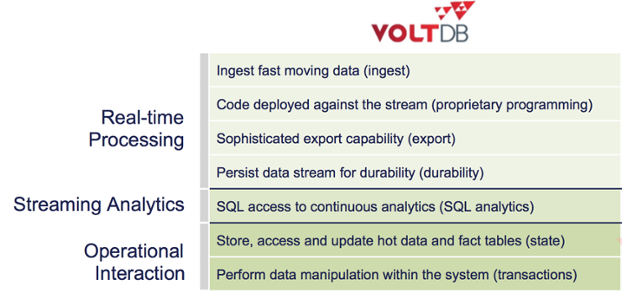

- Streaming analytics and operational data stores are converging into real-time platforms, with Volt Active Data integrating real-time ingestion, streaming analytics, and fast in-memory storage to enable fast data applications.

Billions and billions of transactions are executed each and every day by production-deployed Volt Active Data applications.

A back-of-the-napkin calculation puts the number north of 50 billion a day. The number is likely much, much higher. These billions of transactions are executed across a variety of innovative applications, applications not practical, or sometimes even possible, to build a decade ago. Chances are that you have touched Volt Active Data – without knowing it – today!

- When you call someone on your cell, there’s a good chance a Volt Active Data application is approving the call.

- If you have played some of the top 10 mobile games within the past few years, a Volt Active Data application likely processed your in-game events.

- If you’ve run a road race recently, there’s a good chance a Volt Active Data application tracked your progress.

- If you live in the UK and turn on a light switch, you are interacting with Volt Active Data as your electrical smart grid uses Volt Active Data to authorize and process grid and meter events.

- If you play baccarat in a casino, there’s a good chance the bets placed and movement of chips on each gaming table is validated by Volt Active Data via in-chip RFID technology.

- If you bought something in China with your credit card, it is likely that your transaction was verified as valid or detected as fraudulent by a Volt Active Data application.

The Volt Active Data engineering team has been building the world’s fastest in-memory database for over eight years now. It’s been a fun as well as challenging journey and we’ve learned a lot in the process. Some of the more notable learnings have been:

Batch is so 2000s. Legacy applications are moving from batch processing of feeds to real-time processing. New applications are being built to ingest, transact, and compute analytics on data streaming from all types of sources, such as IoT sensors, mobile devices, logs, etc. The world is moving to real-time and turning to products like Volt Active Data, which has the capability to handle high throughput (thousands to millions of events per second).

SQL rules the roost. Volt Active Data was founded around the same time as the NoSQL movement. At the time, building a distributed SQL data store seemed contrarian, given the NoSQL hype. Fast forward a half-dozen years and we now see many of the more popular NoSQL products offering SQL or SQL-like interfaces. Even the Hadoop ecosystem has added SQL interfaces to the data lake. SQL is here to stay (and, in fact, never left).

Volt Active Data’s commitment to SQL remains strong. Over the past year we’ve added windowing analytic SQL, geospatial SQL support (points and polygons), continuous queries via real-time materialized views, the ability to join streams of data to compute real time analytics, and the ability to very quickly generate an approximate count of the number of distinct values. More advanced SQL capabilities are made available nearly every month.

Expect the unexpected, operationally. When you build a highly-available, clustered database that runs 24×7 at high throughput, on bare metal or in the cloud, all types of error scenarios that could happen, do happen. Disks fail, networks break down, power goes out, mistakes are made, lightning strikes.

Building a production-ready operational database is tough work. The bar is set high and not negotiable. The following features are table stakes for current and next generation applications:

- High availability: Machines, containers and virtual machines can and do break, crash or die. Networks pause or partition. The database must handle these situations properly.

- Correctness: Accuracy matters. Every client should see a consistent view of the data.

- Predictable latency: Response time should be predictable, within 99.999% of the time.

- Durability: Never lose data even in the face of nodes crashing or networks partitioning.

- Performance: The database needs to scale with your application. Adding additional nodes should scale throughput smoothly and linearly.

To maintain these requirements across all scenarios, Volt Active Data Engineering constantly pounds on the database in our QA lab. We focus on randomizing errors of all types. The more we learn, the more we feel we need to test more. That’s one of the reasons we reached out to Kyle Kingsbury, (aka Aphyr), to independently apply his dastardly Jepsen test suite and methodology to Volt Active Data. As part of this effort, we found a few issues in the product before they caused issues in customer deployments. This effort remains one of the team’s proudest accomplishments of 2016.

But we know there’s always more work to do, and we’re committed to doing it.

Streaming analytics and operational data stores are merging into a new real-time platform

The architecture required for real-time processing of streaming data is evolving rapidly. When we started building Volt Active Data, a developer had to cobble together numerous pieces of technology, (e.g. ZooKeeper, Kafka, Storm, Cassandra), to process real-time streams of data.

Volt Active Data Founding Engineer John Hugg stated at a recent Facebook @Scale conference, “OLTP at scale looks a lot like a streaming application.” Today we’re seeing the result of that evolution: a convergence of real-time ingestion, streaming analytics, and operational interaction into a single platform. While creating the next generation of database, the Volt Active Data engineering team has assembled the core components for building streaming fast data applications in Volt Active Data:

- Real-time ingestion with in-process, highly-available importers reading from Kafka, Kinesis and other streaming sources;

- Streaming analytics on live data via ad hoc SQL analytics as well as continuous queries; and

- A blazingly fast in-memory storage and ACID transaction execution engine capable of accessing hot and historical data to make per-event decisions millions of times per second.

The Volt Active Data engineering team is not done building (or learning)! Our mission is to make building highly-reliable, high throughput fast data applications not only possible, as it is today, but also extremely easy. So stay tuned. Over the coming months we’ll be delivering additional geo-distributed and high availability features, additional importers and exporters for building fast data pipeline applications, and improving our real-time analytical SQL capabilities.