Key Takeaways

- Fast data and big data ecosystems are not competing; they are mutually beneficial, and businesses need both.

- Fast data enables real-time, context-aware decisions based on customer data, enhancing customer experience and enabling new business models.

- Fast data systems operationalize the learning and insights derived from big data, leading to smarter customer engagement and improved business results.

- Combining fast data with big data makes information more actionable and beneficial, allowing for better user experiences and informed decision-making.

- Businesses should develop a fast data strategy, including identifying opportunities, assessing infrastructure, understanding alternatives, agreeing on success criteria, and prototyping solutions.

I recently joined Volt Active Data as the lead Solutions Architect responsible for helping prospects, customers and partners on the West Coast and in the Central US. Before joining Volt Active Data, I was part of the professional services group at one of the three major Hadoop vendors, where I worked on both pre-sales and post-sales engagements. I have been actively involved in big data since 2010, architecting and implementing various open-source projects in the Hadoop ecosystem.

Over the last few months, working with businesses that chose to address their fast data needs, I have experienced firsthand the transition from batch systems to real-time systems. Speed is great, but deriving insight from piles of data is a very hard problem. For businesses, how fast you act on a customer’s data to make a contextually relevant decision is as important as the insight derived from analyzing big data.

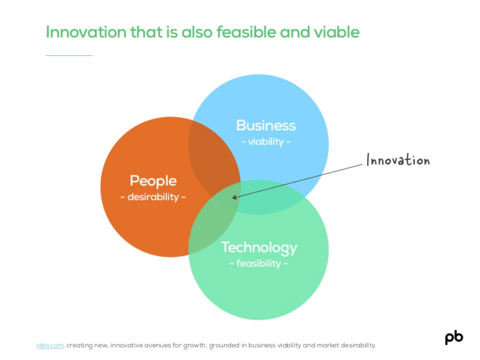

So, as the title asks, are the fast data and big data ecosystems competing with each other, like David and Goliath, where for one to succeed the other must fail? I don’t believe so. By the end of this post, I hope to convince you that the interplay between these two distinct ecosystems is not only mutually beneficial but also necessary.

“When I am getting ready to reason with a man, I spend one-third of my time thinking about myself and what I am going to say and two-thirds about him and what he is going to say.”

– Abraham Lincoln

In 2010, Apache Hadoop (which had its roots in Nutch and a few research papers from Google) was barely four years old; only a handful of Web 2.0 companies had adopted it to index the web and make it searchable. At the time, the original team that developed Hadoop was still part of Yahoo, and Cloudera, a young startup, was trying to compete with Amazon and still figuring out what its product should be. In the intervening years, I have seen Hadoop evolve into a much wider platform on which businesses in life sciences, financial services, manufacturing and many other sectors have modernized their infrastructure to realize its OpEx/CapEx benefits.

The global big data market is projected to reach $122B in revenue by 2020. By then, every person online will create roughly 1.7 megabytes of new data every second of every day, on top of the 44 zettabytes (44 trillion gigabytes) of data that will exist in the digital universe. In a recent Computerworld article, University of California Berkeley Professor Vincent Kelly predicted that more than a trillion devices would be in our world within the next 10 years. Frost & Sullivan forecasts that global data traffic will exceed 100 zettabytes annually by 2025.

As the physical and digital worlds converge, we are witnessing nothing short of the datafication of life. Data will continue to grow at record rates and will overwhelm our ability to manage it effectively. With a trillion devices on the way, the volume, velocity and variety of data are going to grow dramatically.

Yes, organizations are amassing ever-larger datasets from a growing number of sources. However, their ability to process this data has not kept pace. That gap is a massive business opportunity, but also a significant potential threat. In an increasingly data-intensive world, your business is only as fast and effective as its ability to process data into real-time, actionable insights, and your competitors are working on exactly that. This is where the concept of “fast data” steps up to the plate.

Competitive edge – Fast Data gives your business wings

Modern consumers of online content have become used to personalized product recommendations, personalized deals and even personalized communications. Companies like Amazon, Netflix and eBay have paved the way for the modern web experience, in which customers are not only accustomed to tailored content but also expect hyper-personalization to get the best experience.

Hyper-personalization is the name of the game, and data plays the key role in delivering personalized, targeted service and tailored content that enhance the customer experience. Being able to act on a customer’s data in real time and make context-aware decisions is very valuable to businesses, which should use these opportunities to consistently target the right audience throughout the customer lifecycle. This not only gives the customer a better experience but also enables new business models, such as pay-as-you-go, that are becoming crucial in today’s on-demand sharing economy.

Fast data, or data in motion, is very different from big data, or data at rest. Fast data applications analyze data and make decisions on it as it arrives, in real time, which is where they have the greatest operational impact. Mike Gualtieri, a principal analyst at Forrester Research, notes that the value of fast data is perishable: it exists in the moment and can be exponentially more valuable than data at rest, whereas the value of big data increases over time. Processing data at breakneck speed while still learning from it over time requires these two technologies to work in sync: a fast data system that handles events as quickly as they appear (preferably transactionally), and a data warehouse capable of analyzing data periodically to derive insights.
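To make the distinction concrete, here is a minimal Python sketch of the two paths working in sync. Everything in it is an illustrative assumption rather than any product’s API: the `Event` shape, the spend threshold, and the names `FastPath` and `batch_insights` are hypothetical. The fast path decides on each event the moment it arrives, using only in-memory state, and appends the event to a history (data at rest); the batch path periodically scans that history to derive an aggregate insight.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Event:
    customer_id: str
    amount: float


class FastPath:
    """Hypothetical fast path: decides on each event as it arrives (data in motion)."""

    def __init__(self, spend_threshold: float) -> None:
        self.spend_threshold = spend_threshold      # assumed business rule
        self.running_spend = defaultdict(float)     # in-memory state per customer
        self.history: list[Event] = []              # stand-in for the data lake

    def on_event(self, event: Event) -> str:
        # Update state and decide immediately, while the event is still fresh.
        self.running_spend[event.customer_id] += event.amount
        self.history.append(event)                  # keep it for later batch analysis
        if self.running_spend[event.customer_id] >= self.spend_threshold:
            return "offer"
        return "none"


def batch_insights(history: list[Event]) -> dict[str, float]:
    """Hypothetical batch path: periodic analysis over accumulated data at rest."""
    totals: dict[str, float] = defaultdict(float)
    counts: dict[str, int] = defaultdict(int)
    for e in history:
        totals[e.customer_id] += e.amount
        counts[e.customer_id] += 1
    # Average purchase size per customer: an insight that improves as data accumulates.
    return {cid: totals[cid] / counts[cid] for cid in totals}


fast = FastPath(spend_threshold=100.0)
print(fast.on_event(Event("alice", 60.0)))
print(fast.on_event(Event("alice", 50.0)))
print(batch_insights(fast.history))
```

In a real deployment the fast path would run inside a transactional store and the history would land in a warehouse or data lake; here both are plain Python objects so the division of labor is easy to see.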

A fast data system supports real-time analytics and complex decision-making while processing a relentless incoming data feed. As complicated as such a system seems, it is an absolute must for anyone looking to compete, particularly in the enterprise space. Emagine International, a Volt Active Data partner, offers one example; its CEO, David Peters, reports that “Subscribers receiving tailored real-time offers bought 253% more services.”
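One common building block for real-time analytics over a continuous feed is a sliding time window. The minimal Python sketch below uses a hypothetical `SlidingWindowCounter` class, an assumed 60-second window and an assumed trigger threshold of three events. It keeps only recent event timestamps per key in memory, so each decision reflects what happened in the last minute rather than in last night’s batch run. It also assumes that timestamps for a given key arrive in non-decreasing order.

```python
from collections import defaultdict, deque


class SlidingWindowCounter:
    """Counts events per key within the last `window_seconds` (hypothetical helper)."""

    def __init__(self, window_seconds: float) -> None:
        self.window_seconds = window_seconds
        self.events: dict[str, deque] = defaultdict(deque)

    def add(self, key: str, timestamp: float) -> int:
        """Record an event and return how many events `key` has in the current window.

        Assumes timestamps for a key arrive in non-decreasing order.
        """
        q = self.events[key]
        q.append(timestamp)
        # Evict timestamps that are window_seconds or more older than this event.
        while q and q[0] <= timestamp - self.window_seconds:
            q.popleft()
        return len(q)


def should_trigger(counter: SlidingWindowCounter, key: str, timestamp: float,
                   threshold: int = 3) -> bool:
    """Assumed rule: act when a key reaches `threshold` events within the window."""
    return counter.add(key, timestamp) >= threshold
```

Because each event only touches its own key’s deque, and old entries are evicted as new ones arrive, memory per key stays bounded by the event rate over one window.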

Fast data systems operationalize the learning and insights that companies derive from big data. Smarter customer engagement through real-time, context-aware applications has a direct impact on business results. Businesses like Emagine tap fast data to provide personalized content to their customers, enabling those customers to gain a competitive advantage through more efficient and agile operations.
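In practice, operationalizing a big data insight often looks like this: a batch job distills history into a compact lookup table, and the fast data system consults that table on every incoming event. The sketch below assumes a hypothetical offer table keyed by customer segment (as if produced offline by a big data job), hypothetical segment assignments, and a hypothetical `balance_low` flag on the live event. It illustrates the pattern only; it is not Emagine’s or Volt Active Data’s implementation.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Offer:
    name: str
    discount_pct: int


# Hypothetical output of an offline big data job: best offer per customer segment.
SEGMENT_OFFERS = {
    "heavy_data_user": Offer("extra_data_pack", 20),
    "roamer": Offer("travel_bundle", 15),
}

# Hypothetical segment assignments, also refreshed periodically by the batch layer.
CUSTOMER_SEGMENTS = {"c1": "heavy_data_user", "c2": "roamer"}


def decide_offer(customer_id: str, balance_low: bool) -> Optional[Offer]:
    """Real-time decision for one incoming event, using batch-derived insight."""
    if not balance_low:                 # context carried by the live event itself
        return None
    segment = CUSTOMER_SEGMENTS.get(customer_id)
    # Unknown customers (no batch-derived segment yet) get no offer.
    return SEGMENT_OFFERS.get(segment) if segment else None
```

The split keeps the expensive work (segmenting customers, learning which offers convert) in the batch layer, while the per-event path is just two dictionary lookups and a condition.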

Changing economics of data

Search has changed our behavior. It has made the once-arduous process of finding and retaining information fast and fluid. As we try new search terms, we stumble upon unexpected ideas and perspectives that may take us in new directions. There are no barriers to entry and no rules. Think of the economics of data the same way: they change not only the scope and volume of data we can process, but also the way businesses think about data in the first place.

Hadoop redefined the economics of big data, reducing the per-terabyte cost by more than 95%. When cost stops being an issue, adoption soars. Smart organizations are using these new economic rules to make big data services available to anyone who needs them, but big data is only as useful as the speed at which it is analyzed. Without timely analysis, businesses won’t get the real-time suggestions and statistics they need to make informed decisions with better outcomes. When fast data is combined with big data, information becomes more plentiful, more actionable and more beneficial to an organization. Fast data is the way forward for operationalizing the insights we get from analyzing terabytes of data and for providing a better user experience.

Fortunately, the falling cost of DRAM, approximately 30% every 12 months, has made fast data an affordable option for just about any organization. Unlike approaches that amount to forms of supercomputing, modern fast data products run on commodity hardware. More enterprises are investing in fast data using in-memory computing, and the market continues to produce an expanding range of solutions. In fact, a recent survey of the financial services industry found that 58% of firms use in-memory technologies for real-time analytic applications, and 28% reported using them in a mission-critical capacity.
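To see what a decline of roughly 30% every 12 months implies, compound it: after n years, the same amount of DRAM costs about 0.7^n of its original price. The small Python function below does that arithmetic. The 30% rate is the figure quoted above; treating it as constant from year to year is a simplifying assumption.

```python
def dram_cost_fraction(years: int, annual_decline: float = 0.30) -> float:
    """Fraction of the original DRAM price remaining after `years` of compounding decline.

    Assumes the decline rate stays constant every year (a simplification).
    """
    return (1.0 - annual_decline) ** years


for n in (1, 3, 5):
    print(f"after {n} year(s): {dram_cost_fraction(n):.1%} of the original cost")
```

Under that assumption, five years of 30% annual declines leave the same memory costing roughly 17% of its original price (0.7^5 ≈ 0.168).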

Overcoming cultural resistance

Most companies with big data installations in production realize that big data is less about size and more about introducing fundamentally new business processes into decision-making. This requires information that has traditionally been siloed within companies to be available across functional organizations. Typically, this results in an uphill political battle: organizations must constantly fight to re-architect business processes and to make sure that the data governance and security mechanisms in place are robust. Information in a data lake is often buried in detailed, unstructured data logs, and various ETL techniques are needed to extract insights from it. The value derived from the analytics piece can greatly exceed the cost of the infrastructure, but to leverage big data, it is vital to have talented data engineers, data scientists, statisticians, project managers and product specialists working in tandem.

The ROI from big data deployments across companies and domains is evident, but it takes a lot of effort and time to materialize because it requires a cultural shift in the way companies operate. In spite of concerted efforts, most early adopters of big data have realized only 15% of the ROI from their big data installations. Analyst firm Gartner has reported, “Through 2018, 90% of the deployed data lakes will be useless as they are overwhelmed with information assets captured for uncertain use cases.” Fast data, unlike big data, which requires highly specialized skills such as R and data science, is much more agile and typically requires commodity skills such as SQL and Java. This ensures a very quick turnaround time for the business and enables rapid prototyping of new product features, which fits well with a fail-fast engineering culture. Businesses typically realize revenue from their fast data investments rather quickly and are also able to react quickly to competition, because it takes less effort to operationalize changes to the platform.
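As an illustration of the “commodity skills” point, a real-time decision rule can often be written as ordinary SQL. The sketch below uses Python’s built-in `sqlite3` module with an in-memory database purely as a stand-in for a fast data store. The `events` table, its columns, the `ingest_and_decide` function and the rule itself (total spend of 100 or more within the last 60 seconds) are hypothetical.

```python
import sqlite3

# In-memory SQLite database as a stand-in for a fast data store (assumption).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE events (customer_id TEXT NOT NULL, amount REAL NOT NULL, ts REAL NOT NULL)"
)


def ingest_and_decide(customer_id: str, amount: float, ts: float,
                      window_s: float = 60.0, threshold: float = 100.0) -> bool:
    """Insert one event, then decide with a plain SQL aggregate over a recent window."""
    with conn:  # commits the insert (or rolls it back on error)
        conn.execute(
            "INSERT INTO events (customer_id, amount, ts) VALUES (?, ?, ?)",
            (customer_id, amount, ts),
        )
    # Sum this customer's spend over the window (ts - window_s, ts].
    (recent_spend,) = conn.execute(
        "SELECT COALESCE(SUM(amount), 0) FROM events "
        "WHERE customer_id = ? AND ts > ?",
        (customer_id, ts - window_s),
    ).fetchone()
    return recent_spend >= threshold
```

The decision logic is a single parameterized `SELECT`, which is the kind of thing most application developers can read, review and change quickly.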

Most people in the business world are at least somewhat familiar with the concept of big data, yet relatively few are familiar with the term ‘fast data’. I am seeing companies across the board, from fintech to IoT/manufacturing to ad tech, beginning to innovate with and leverage the potential of fast data. It is hard not to draw parallels with the early days of big data, as there is a lot of excitement around fast data and in-memory computing. Perhaps fast data has taken a back seat to big data in mainstream conversation because the two are so conceptually intertwined that it is assumed one follows the other. Businesses are rushing to allocate massive budgets to derive insights from big data without a proper strategy to operationalize those insights. I believe that eventually, all businesses will compete on their ability to make decisions “in the moment” using fast data.

Here are the five steps I propose as part of your fast data strategy:

- Identify your fast data opportunity

- Assess and leverage your existing infrastructure

- Understand the (business implications of) alternatives

- Get agreement on success criteria for the project

- Prototype, pilot, refine
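Agreeing on success criteria is easier when the criteria are measurable. As one hypothetical way to do that, the sketch below encodes two assumed criteria, a 99th-percentile latency ceiling and a minimum throughput, and checks a pilot’s measurements against them. The `SuccessCriteria` fields, the `pilot_passes` function and any threshold values are placeholders, not recommendations; the percentile uses the nearest-rank method.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class SuccessCriteria:
    max_p99_latency_ms: float   # hypothetical agreed latency ceiling
    min_throughput_tps: float   # hypothetical agreed floor, in transactions per second


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of samples <= it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def pilot_passes(latencies_ms: list[float], transactions: int,
                 duration_s: float, criteria: SuccessCriteria) -> bool:
    """Return True only if the pilot run meets every agreed criterion."""
    p99 = percentile(latencies_ms, 99)
    throughput = transactions / duration_s
    return p99 <= criteria.max_p99_latency_ms and throughput >= criteria.min_throughput_tps
```

Writing the criteria down as data makes the “refine” loop concrete: each pilot iteration either passes the agreed bar or shows exactly which metric fell short.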

Here is a link to a detailed guide that walks you through the process.

So, despite what the legend says, in this case, David and Goliath get to live happily ever after in their own worlds, periodically interacting as necessary.